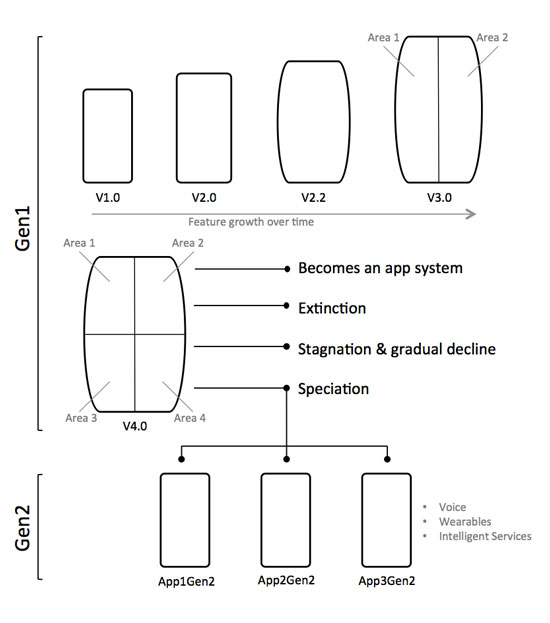

We began working on IoT and security camera applications in 2002. Over the years we have made some observations that have become our development values.

Intelligent

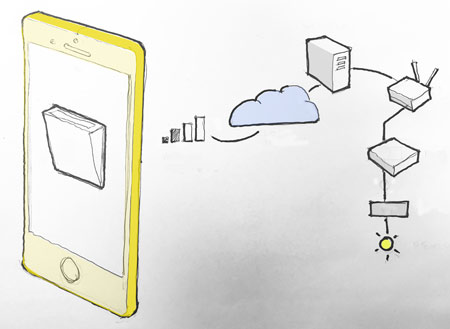

An app is only part of an IoT system. It is the part of the system that the user touches, and it is often the only way that users experience the system. Behind the app screen there are appliances, cloud services, computation, storage, APIs, connectivity, time and more. All of these elements combine to give the end user intelligent effects and control, rendered seamlessly in the mobile app. We think of the intelligence of the app in the context of the performance of the whole system.

Comprehensible

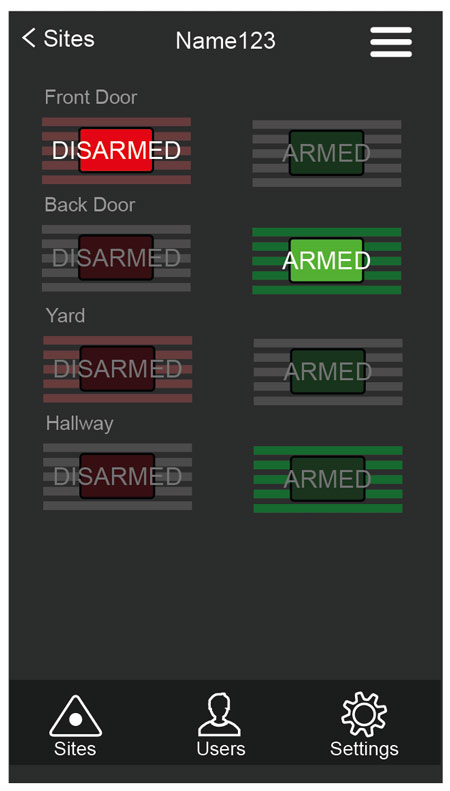

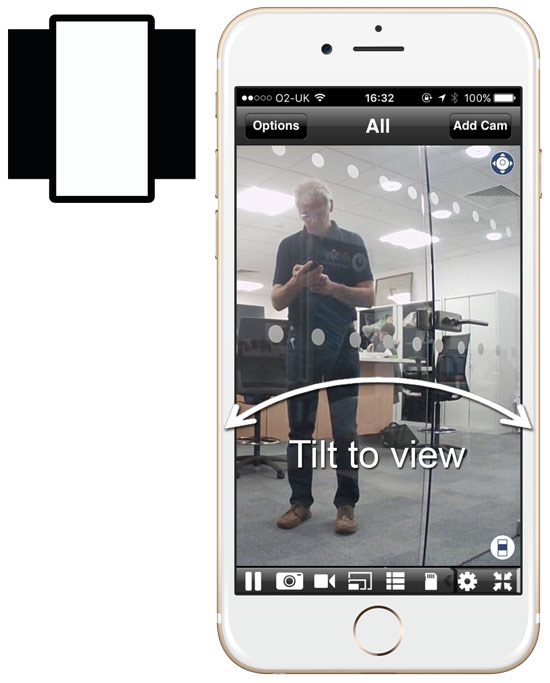

A powerful, complex app cannot always be simple and easy to use, but it must be comprehensible. IoT apps can be hard to understand because the app provides only a limited window onto a partially hidden system. IoT apps often involve actions that occur automatically, which can disorientate a user. We work hard to make unavoidably complex, merged physical and computational environments comprehensible.

Agency

Apps can be agents working on your behalf. The user interface is a place where you set up rules and adjust preferences. Once set up, the app should run semi-independently. When something happens in the system that requires human attention, the app should bring the issue to the user, setting out the causes and possible remedies.
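The rule-and-notify pattern described above can be sketched in a few lines. This is only an illustrative sketch, not our production code: the `Rule` and `Alert` types, the `evaluate` function and the camera heartbeat example are all hypothetical names chosen for the illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Alert:
    """What the user sees when the system needs attention."""
    issue: str
    causes: list[str]
    remedies: list[str]


@dataclass
class Rule:
    """A user-configured rule: a condition to watch for and the alert to raise."""
    name: str
    needs_attention: Callable[[dict], bool]
    build_alert: Callable[[dict], Alert]


def evaluate(rules: list[Rule], reading: dict) -> list[Alert]:
    """Run every rule against one reading; return alerts only when needed."""
    return [r.build_alert(reading) for r in rules if r.needs_attention(reading)]


# Hypothetical rule: a camera that has not reported for more than 5 minutes.
camera_offline = Rule(
    name="camera offline",
    needs_attention=lambda r: r["seconds_since_heartbeat"] > 300,
    build_alert=lambda r: Alert(
        issue=f"Camera '{r['camera']}' has stopped responding",
        causes=["Power loss at the camera", "Wi-Fi or network outage"],
        remedies=["Check the camera's power supply", "Restart the router"],
    ),
)

# A healthy reading produces no alerts; a stale one produces a single alert.
quiet = evaluate([camera_offline], {"camera": "front door", "seconds_since_heartbeat": 12})
alerts = evaluate([camera_offline], {"camera": "front door", "seconds_since_heartbeat": 900})
```

The point of the design is that the app stays silent while the rules are satisfied, and when it does interrupt the user, the alert already carries likely causes and remedies rather than a bare error.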

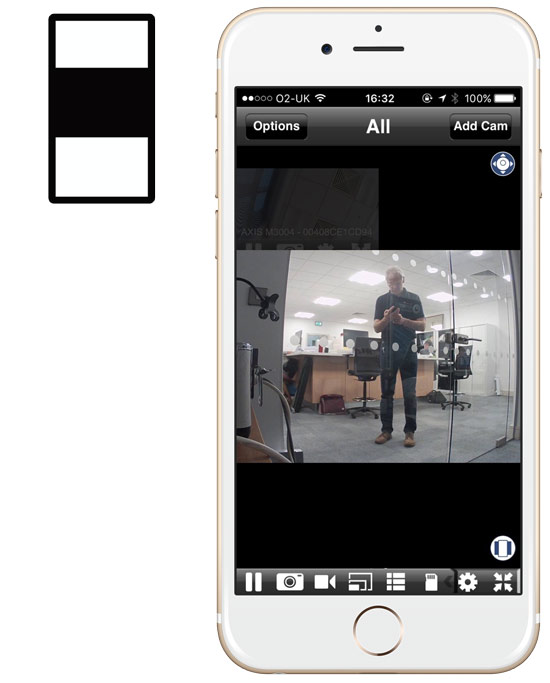

Aware

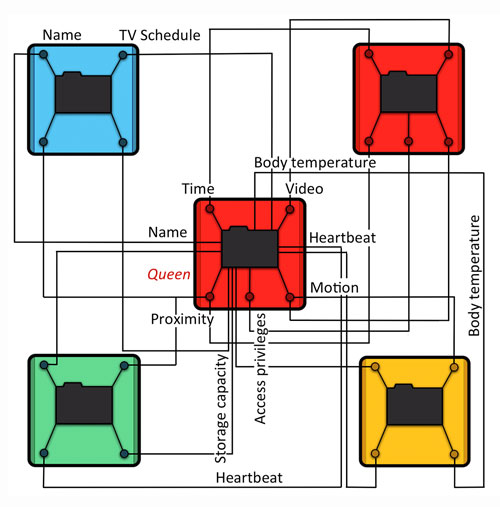

An app should remember what you did in a particular context, and help you achieve that outcome again if the context is similar.

Secure

We stand over all our code. There are no black boxes of unknown code components. All storage is encrypted. All communications are encrypted and authenticated.

Fast

We count the clicks. Each click is valued. The UI time is measured. The server and appliance response times are also measured. For each process we show both the total expected time and the time elapsed so far. Communicating what is expected, and when it is expected, is part of making the app experience comprehensible.
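As a rough sketch of reporting expected and elapsed time together, the snippet below tracks one operation and produces a status line for the UI. The `TimedOperation` class, its fields and the "Unlock door" example are assumptions made for illustration; a real app would take the expected duration from measured UI, server and appliance response times.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class TimedOperation:
    """Tracks one user-visible operation against its expected duration."""
    label: str
    expected_seconds: float
    # Monotonic clock, so the measurement is unaffected by wall-clock changes.
    started_at: float = field(default_factory=time.monotonic)

    def elapsed(self, now: Optional[float] = None) -> float:
        """Seconds since the operation started (now is injectable for testing)."""
        now = time.monotonic() if now is None else now
        return now - self.started_at

    def status(self, now: Optional[float] = None) -> str:
        """A status line telling the user what to expect and where things stand."""
        elapsed = self.elapsed(now)
        if elapsed <= self.expected_seconds:
            return f"{self.label}: {elapsed:.1f}s of about {self.expected_seconds:.1f}s expected"
        return f"{self.label}: {elapsed:.1f}s, longer than the {self.expected_seconds:.1f}s expected"


# Fixed timestamps are used here so the example is deterministic.
op = TimedOperation("Unlock door", expected_seconds=2.0, started_at=100.0)
print(op.status(now=101.5))  # Unlock door: 1.5s of about 2.0s expected
print(op.status(now=103.0))  # Unlock door: 3.0s, longer than the 2.0s expected
```

Telling the user when an operation has overrun its expected time, instead of showing an indefinite spinner, is one way to keep a slow response comprehensible.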

Reliable

100% reliability is not possible even if the system is as simple as a kitchen light switch. Reporting, mitigation and notification are all strategies that we use to help manage reliability failures in complex IoT systems.

Authenticated

There are competing forces. It is critical to know who is doing what, and that permissions are appropriate and manageable. It is also critical for the user to know that the system is secure, true and responsive. Competing against that is the risk that security layers become overly burdensome for activities that don’t need to be secure. We work to get the balance right.

Also see Navihedrons