I met Roy Stringer at an academic conference at Glasgow School of Art in about 1998. He was there talking about his Navihedron concept (it was a coincidental meeting; I was at the conference speaking about a different subject). Something about Roy Stringer and his Navihedron idea has made me remember it all this time.

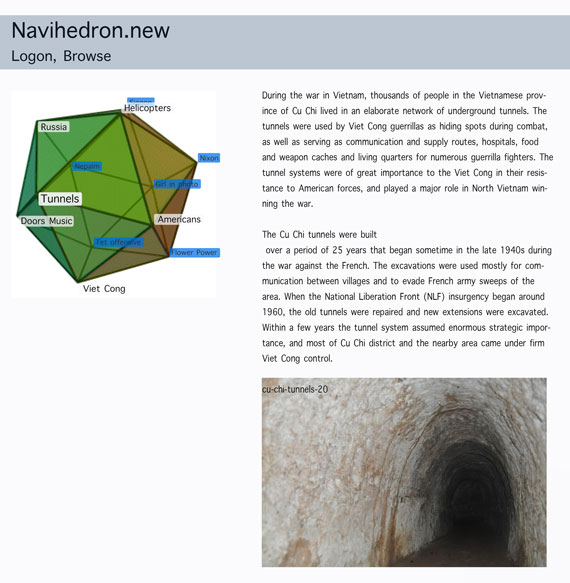

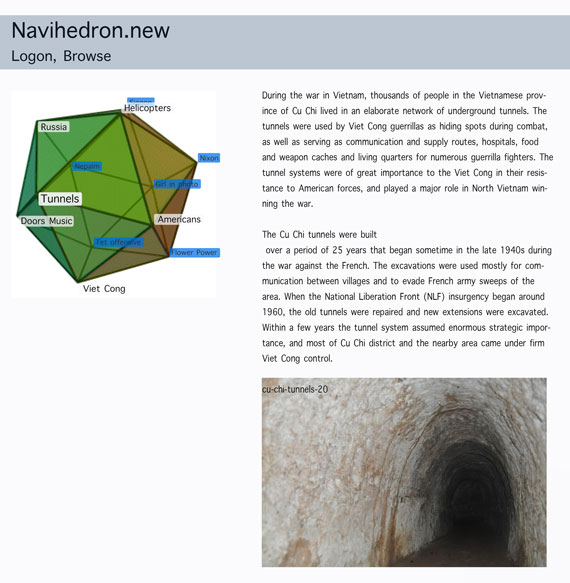

Stringer’s idea was that an icosahedron (12 vertices, 30 edges, 20 faces) could be used effectively as a navigation system to present non-linear content. Each point of the icosahedron would be a subject heading, and each point can be clicked. Two things happen when a point is clicked: (1) the Navihedron animates, bringing the clicked point to the front; (2) content relevant to the clicked point's subject heading appears in a frame or content area adjacent to the Navihedron.
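The two click behaviours can be sketched in a few lines. This is only an illustration of the geometry, not Stringer's Shockwave implementation: the function names (`rotate_to_front`, `on_click`) and the placeholder headings are assumptions made for the example, and "front" is taken to mean the point (0, 0, 1) facing the viewer. The rotation uses Rodrigues' formula.

```python
import math

PHI = (1 + math.sqrt(5)) / 2  # golden ratio


def icosahedron_vertices():
    """Return the 12 vertices of a regular icosahedron, scaled to unit length."""
    raw = []
    for a in (-1.0, 1.0):
        for b in (-PHI, PHI):
            # Cyclic permutations of (0, +/-1, +/-PHI)
            raw += [(0.0, a, b), (a, b, 0.0), (b, 0.0, a)]
    norm = math.sqrt(1 + PHI * PHI)
    return [(x / norm, y / norm, z / norm) for x, y, z in raw]


def dot(u, v):
    return sum(p * q for p, q in zip(u, v))


def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])


def rotate_to_front(vertices, clicked):
    """Rotate every vertex so that vertices[clicked] ends up at (0, 0, 1)."""
    front = (0.0, 0.0, 1.0)
    a = vertices[clicked]
    axis = cross(a, front)
    sin_t = math.sqrt(dot(axis, axis))
    cos_t = dot(a, front)
    if sin_t < 1e-12:
        # Already at the front, or exactly at the back (half-turn about x).
        if cos_t > 0:
            return list(vertices)
        return [(x, -y, -z) for x, y, z in vertices]
    k = tuple(c / sin_t for c in axis)  # unit rotation axis
    rotated = []
    for v in vertices:
        kxv = cross(k, v)
        kdv = dot(k, v)
        # Rodrigues' rotation formula applied to one vertex
        rotated.append(tuple(
            v[i] * cos_t + kxv[i] * sin_t + k[i] * kdv * (1 - cos_t)
            for i in range(3)))
    return rotated


# Hypothetical headings, one per vertex; a real Navihedron would use the author's own.
HEADINGS = [f"Heading {i + 1}" for i in range(12)]


def on_click(vertices, clicked):
    """Return the two results of a click: the new orientation and the content to show."""
    return rotate_to_front(vertices, clicked), f"Content for {HEADINGS[clicked]}"
```

This computes only the final orientation. An animated version would presumably step the rotation angle from zero up to its final value over several frames.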

It is a very pleasing effect. The animation is engaging. The viewer can revolve the Navihedron, exploring the 12 points. Each point has five geometrically related points that offer the next step in the story. The viewer can create their own path through the story. It allows users to browse in a natural way and yet remain within a cohesive story. In comparison, linear presentations seem rather boring. The key to it may be that the reader can select their own entry point rather than being handed the start of a linear story. They can click on the point they are interested in, and that point is the start of their story. Once they have selected their start, they can control their own story rather than being directed through numbered linear pages.
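The "five geometrically related points" follow directly from the shape of the icosahedron, and a short sketch can confirm it. The helper names below (`neighbours`, `is_valid_path`) are made up for this illustration. Two vertices are treated as related when they sit exactly one edge apart.

```python
import math
from itertools import combinations

PHI = (1 + math.sqrt(5)) / 2  # golden ratio


def icosahedron_vertices():
    """Return the 12 vertices of a regular icosahedron (edge length 2)."""
    verts = []
    for a in (-1.0, 1.0):
        for b in (-PHI, PHI):
            # Cyclic permutations of (0, +/-1, +/-PHI)
            verts += [(0.0, a, b), (a, b, 0.0), (b, 0.0, a)]
    return verts


def neighbours(verts):
    """Map each vertex index to the indices of the vertices one edge away."""
    # The shortest distance between any two vertices is the edge length.
    edge = min(math.dist(p, q) for p, q in combinations(verts, 2))
    adj = {i: [] for i in range(len(verts))}
    for i, j in combinations(range(len(verts)), 2):
        if math.isclose(math.dist(verts[i], verts[j]), edge, rel_tol=1e-9):
            adj[i].append(j)
            adj[j].append(i)
    return adj


def is_valid_path(adj, path):
    """True if every step in the reader's path moves to a neighbouring point."""
    return all(b in adj[a] for a, b in zip(path, path[1:]))


verts = icosahedron_vertices()
adj = neighbours(verts)
print(len(verts), "points,", sum(len(n) for n in adj.values()) // 2, "edges")
print("next steps from point 0:", adj[0])
```

Any vertex can serve as the entry point, and from there a reader's route is simply a walk along edges, which is what `is_valid_path` checks.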

When Stringer presented the Navihedron in 1998, a user could sign on to Navihedra.com, build their own Navihedron and edit the headings. The idea was that a finished Navihedron could be exported and then used in a standalone website.

Stringer also used a physical model of the Navihedron, made from plastic tubes held together with elastic. Any subject could be explored using the model as a tool and prompt. I have made a few of these over the years and they are a great three-dimensional connection between information and space.

Roy Stringer showing opposite points. Image credit: https://katiesvlog.blogs.com/vlog/2006/03/ted_nelson_at_t.html

Stringer felt that the Navihedron might be useful for helping students put together a story about a subject they were studying. As an example of this, I made a sketch showing a Navihedron in the context of a school history class about the Vietnam War. It is not a linear presentation of history: you can enter from any point of interest.

Author's sketch of a non-linear, history-lesson-style Navihedron

The Navihedron idea has had a wide reach. For example, Andy Patrick, formerly of Adjacency, recalls Stringer's influence on the design of early dot.com e-commerce sites, including the Apple Store.

Stringer was inspired by Ted Nelson, who in 1965 first used the term “hypertext” to describe:

“nonsequential text, in which a reader was not constrained to read in any particular order, but could follow links and delve into the original document from a short quotation”.

Nelson says that HTML is exactly what his project Xanadu was trying to prevent. HTML, he says, has “ever-breaking links, links going outward only, quotes you can’t follow to their origins, no version management, no rights management”.

Here is the brilliant, thoughtful Ted Nelson talking about the structure of computing, Xanadu and transclusion.

(En passant: don’t miss these great Ted Nelson one-liners.)

Roy Stringer sadly died very young in 2000. Although his work had a huge impact, Stringer's Navihedron site has somehow disappeared from the web. It has fallen victim to what Nelson calls a world of “ever-breaking links”. In fact, I could not find a working Navihedron anywhere.

Image credit: The front page for access to Navihedra.com, from Alan Levine’s memories of Roy Stringer: https://cogdogblog.com/

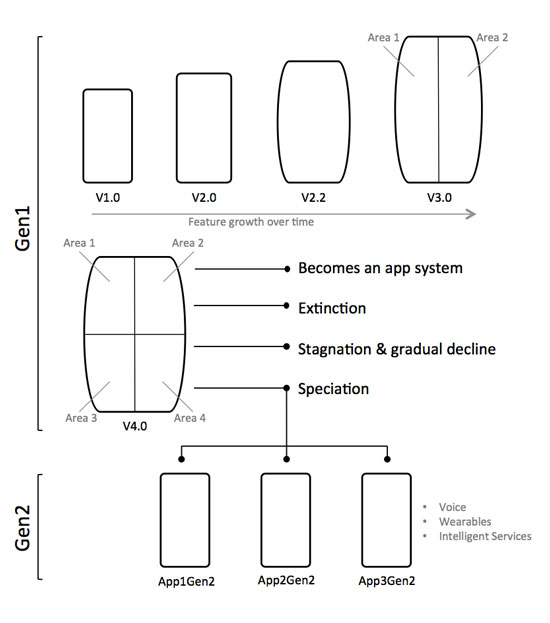

Perhaps there is something in Stringer's Navihedron work that has been superseded by the all-pervading paradigm of web-based navigation. The style of website with a menu at the top and a sub-menu down the side or dropping down (this one included) has become dominant.

Daniel Brown of Amaze (the company Stringer founded) helped to develop the Navihedra site, and he points out that Stringer’s original Navihedron was built using Shockwave. That technology has now been largely replaced by CSS and JavaScript.

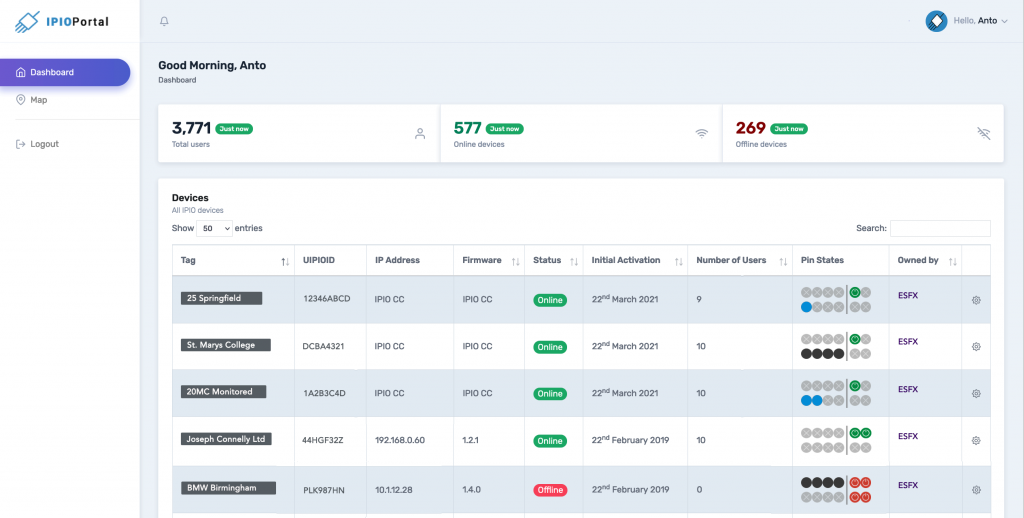

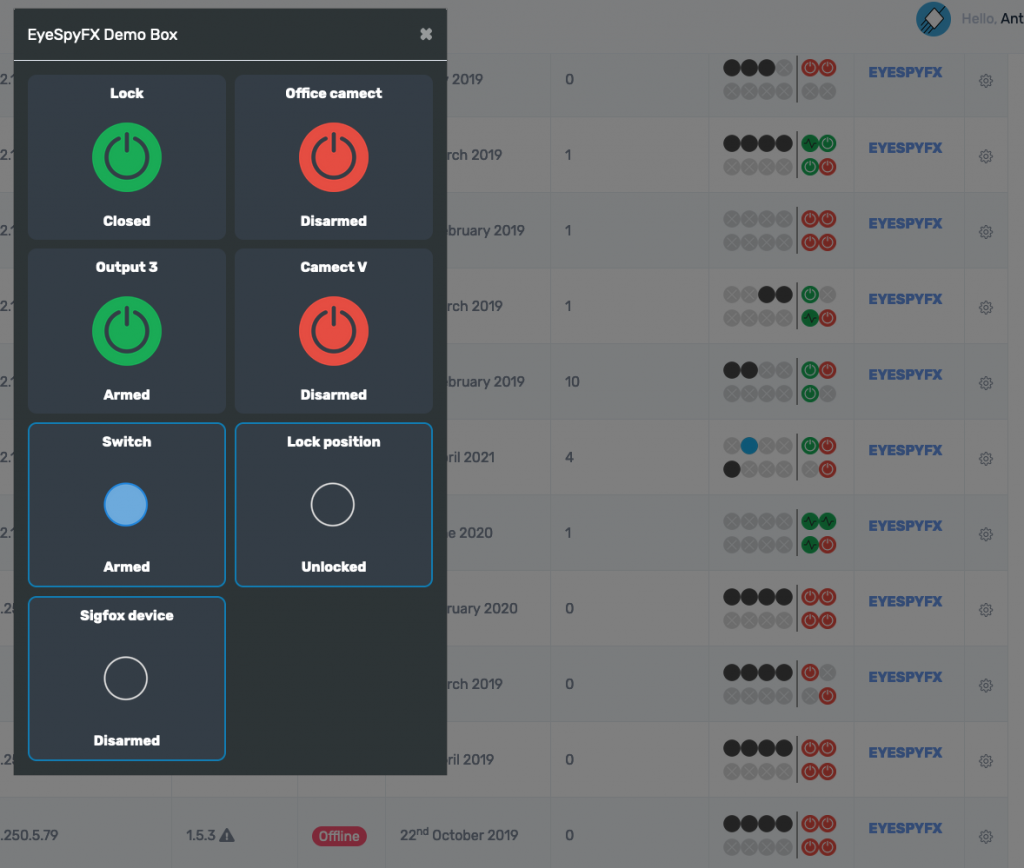

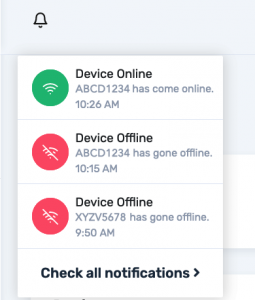

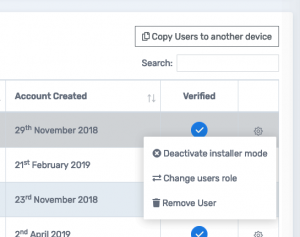

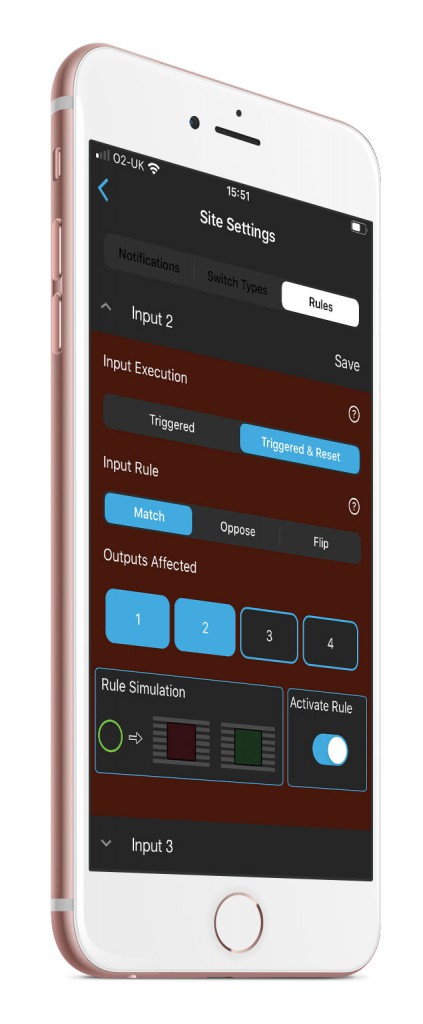

At EyeSpyFX we set out to see if we could rebuild a Navihedron using modern, web-friendly technologies. The Navihedron you see here is a cube and not an icosahedron as Stringer originally proposed, but the storytelling concept is essentially the same. We built it out of curiosity, really, just to see if we could do it. It is not quite performing as we intend, but I think it is a good start: it is controllable and animated when viewed on a PC (browser dependent), and animated only when viewed on mobile. We would like to build more and in time develop it into a fully editable system for creating Navihedrons; we are still searching for the commercial reason to push it forward. The Navihedron concept is intriguing, perhaps a lost web treasure. Fully implementing a Navihedron using web technologies is surprisingly difficult, and it is going to be a long-term project. This blog article and demo are just a step in the general direction.