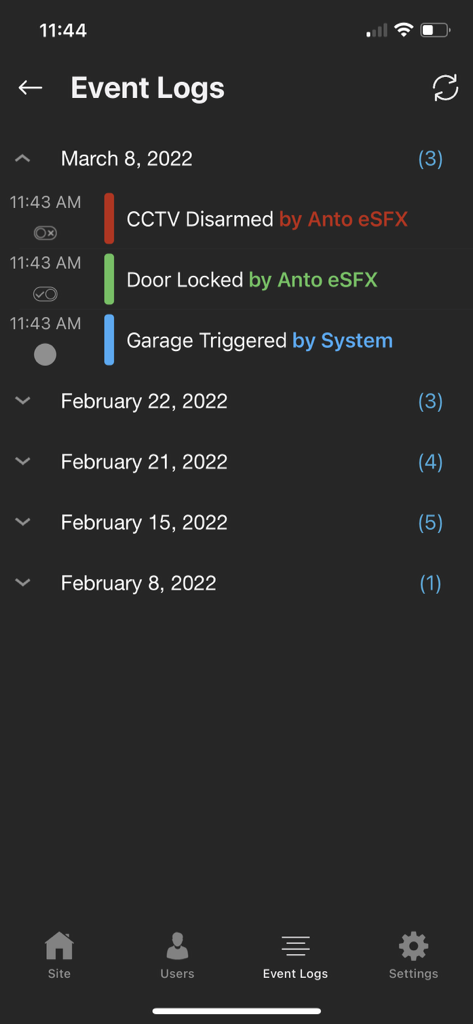

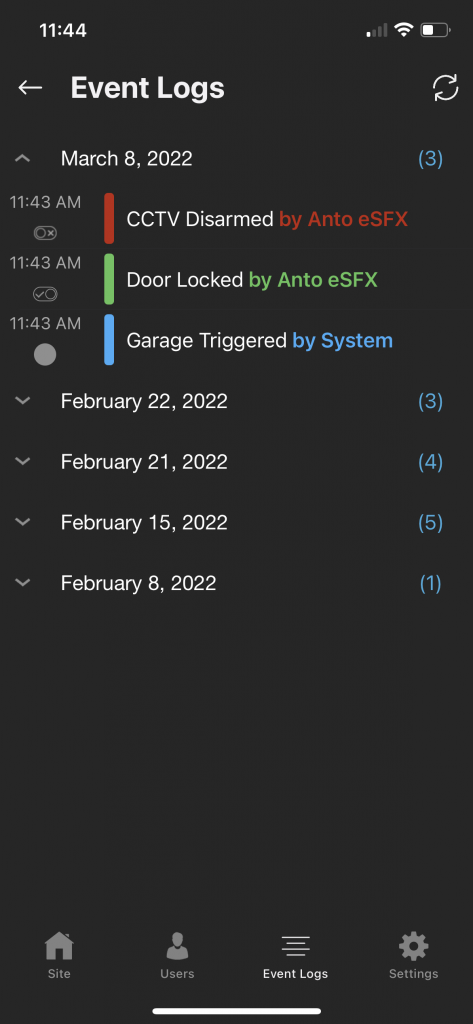

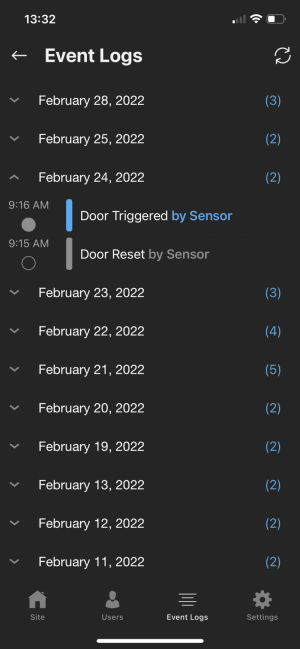

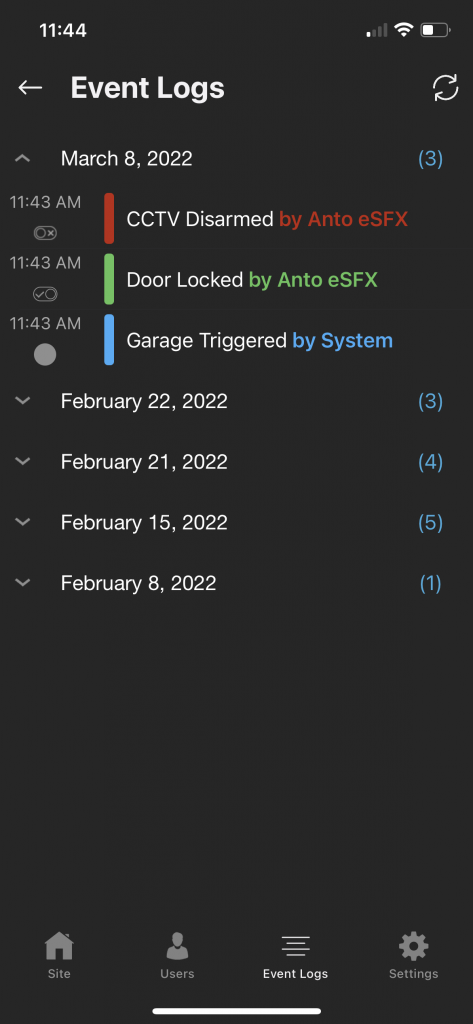

Event logs in security camera and access control systems are notoriously hard to read. There are too many events, the individual entries are poorly phrased and badly parsed, and you have to wade through a lot of data with no real information value to find the item you are looking for. Reading logs on a mobile device is harder still, because a log line is typically longer than the width of the screen. There are good technical reasons why event logs are complex, and some of the difficulty in reading them is unavoidable. In the IPIO app we have tackled this problem and come up with a design that makes the complexity a little easier to read.

Organised per day and collapsible

We decided that a day was the right unit for viewing logs. There are cases where you need to look at logs across a whole week, but they are less common, and we decided against adding the UI overhead of that option. Each day can be easily expanded and collapsed.

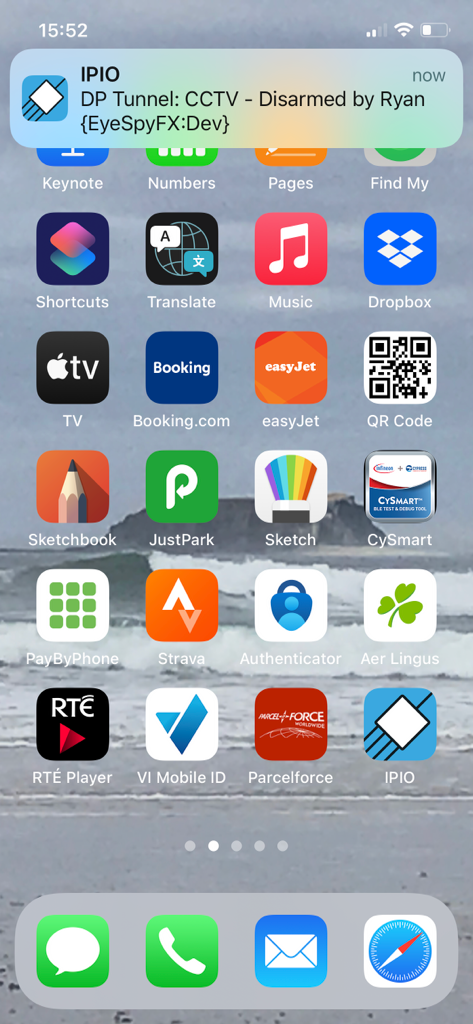

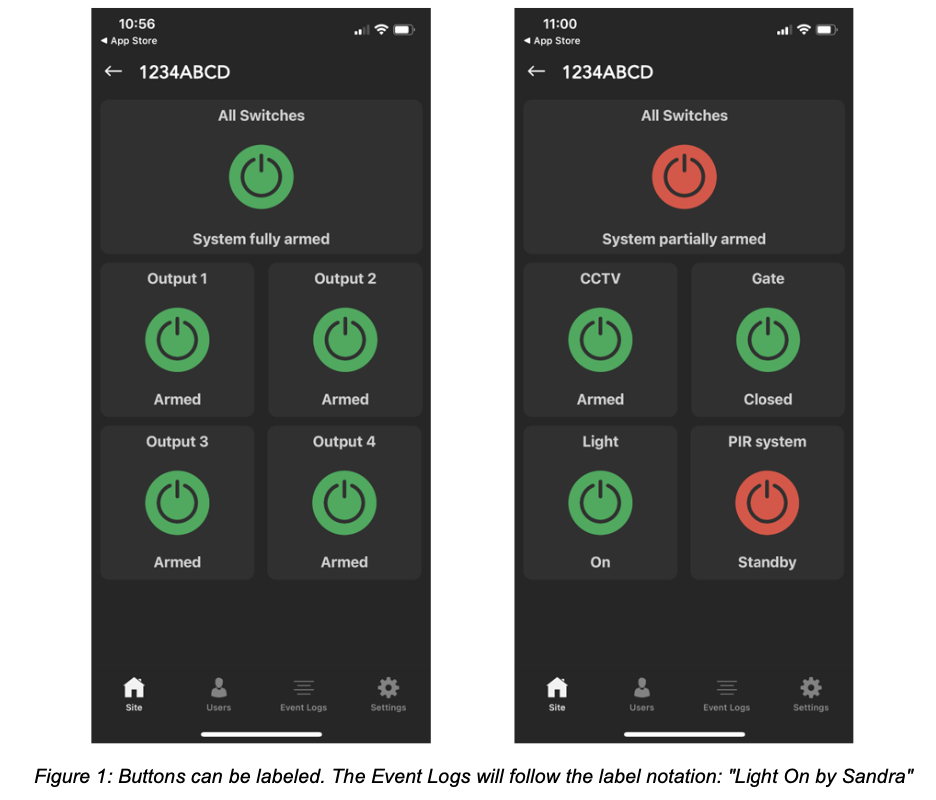
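As a rough illustration of the per-day grouping, here is a minimal Python sketch. It is not the IPIO implementation: the `group_by_day` function, the `(timestamp, message)` event shape, the sample events and the `expanded` set are all assumptions made for the example.

```python
from collections import defaultdict
from datetime import datetime


def group_by_day(events):
    """Group (timestamp, message) pairs by calendar day, newest day first."""
    days = defaultdict(list)
    for timestamp, message in events:
        days[timestamp.date()].append((timestamp, message))
    # Days are sorted newest-first; events inside a day stay chronological.
    return [
        (day, sorted(days[day], key=lambda event: event[0]))
        for day in sorted(days, reverse=True)
    ]


# Hypothetical sample data, invented for this example.
events = [
    (datetime(2024, 3, 2, 8, 15), "Kitchen Camera, On, by Joe"),
    (datetime(2024, 3, 1, 22, 40), "Front Door, Armed, by Joe"),
    (datetime(2024, 3, 2, 7, 5), "Hall Sensor, Reset"),
]

grouped = group_by_day(events)

# A collapsible view only needs to remember which days are open.
# Here the most recent day starts expanded and the rest stay collapsed.
expanded = {grouped[0][0]}
```

Keeping the expanded days in a separate set means that collapsing a day changes only what is drawn, not the underlying data.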

The logs follow the naming preferences the user has set

Every system gives default names to entities such as cameras, doors, sensors and buttons. In many event log systems these names carry through to the logs. This has the advantage of always being accurate from the system's point of view, but the disadvantage of being difficult to interpret.

For example: Node1 UnSet by UserID AX456.

We decided against that and instead use the names the user has given to buttons and actions.

For example: Kitchen Camera, On, by Joe.
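One way to picture this substitution is a lookup from system identifiers to user-chosen names, falling back to the system name when the user has not set one. The sketch below is illustrative only: the tables, the `friendly_entry` function and the specific pairings (for instance that `Node1` is the kitchen camera) are assumptions, not details of the IPIO app.

```python
# Hypothetical lookup tables; a real app would load these from user settings.
ENTITY_NAMES = {"Node1": "Kitchen Camera"}
USER_NAMES = {"AX456": "Joe"}
ACTION_NAMES = {"Set": "On", "UnSet": "Off"}


def friendly_entry(entity_id, action, user_id):
    """Build a log entry from user-chosen names, falling back to system names."""
    entity = ENTITY_NAMES.get(entity_id, entity_id)
    action_label = ACTION_NAMES.get(action, action)
    user = USER_NAMES.get(user_id, user_id)
    return f"{entity}, {action_label}, by {user}"


named = friendly_entry("Node1", "UnSet", "AX456")
unnamed = friendly_entry("Node7", "Set", "AX456")
```

With these assumed tables, `named` reads "Kitchen Camera, Off, by Joe", while `unnamed` keeps the system identifier `Node7` because no user name exists for it.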

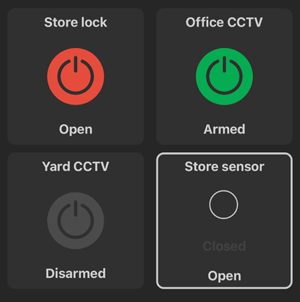

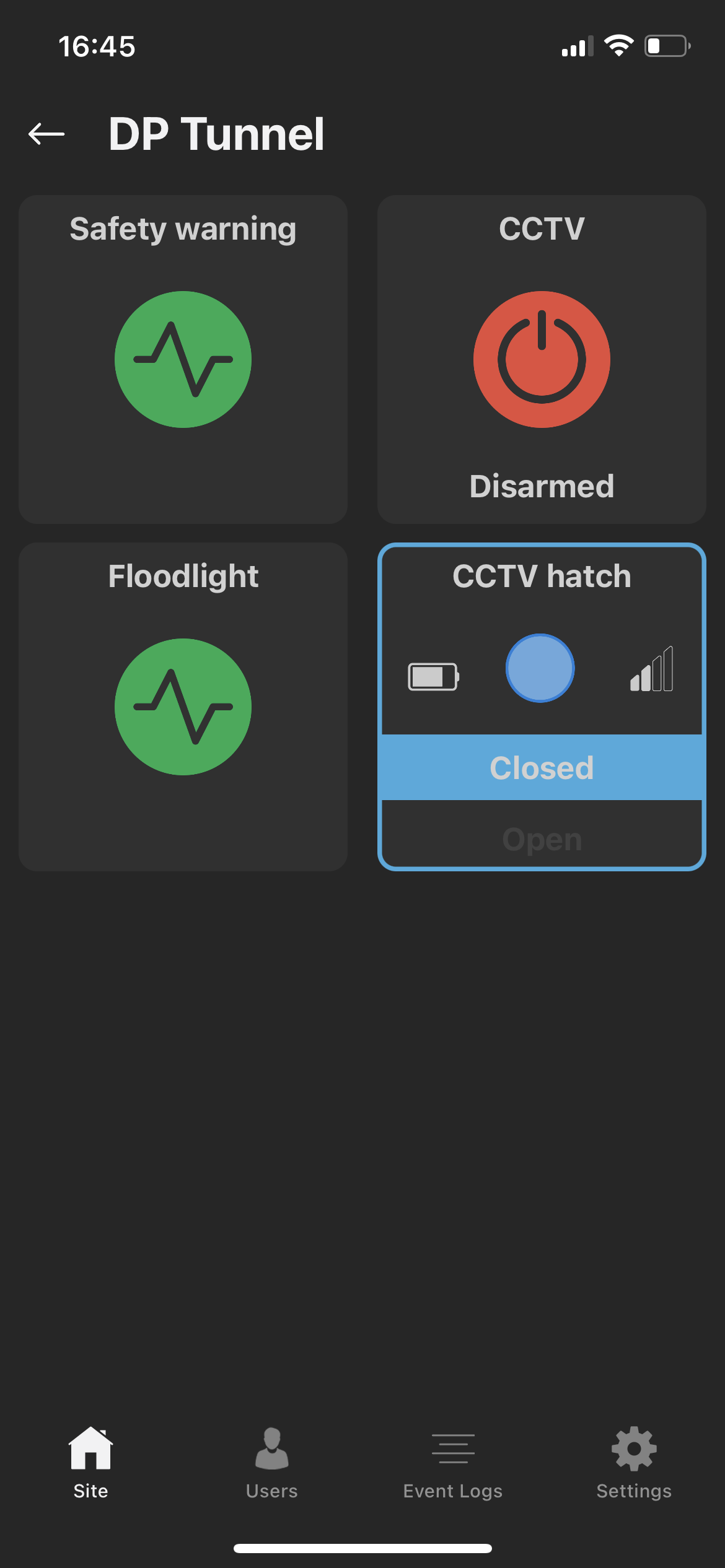

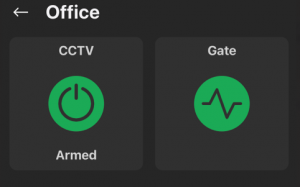

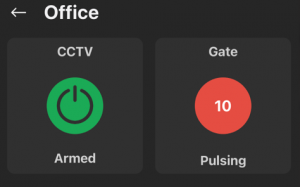

Colour coded

We use colour in a way that increases legibility. Green indicates armed, red indicates disarmed, blue indicates a sensor reset and white indicates a trigger event.
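The colour scheme above amounts to a fixed mapping from event kind to colour. A minimal sketch, assuming a hypothetical `EventKind` enum and plain colour names rather than the app's actual palette values:

```python
from enum import Enum


class EventKind(Enum):
    """Event kinds named in the text; the enum itself is an assumption."""
    ARMED = "armed"
    DISARMED = "disarmed"
    SENSOR_RESET = "sensor reset"
    TRIGGER = "trigger"


# Colours as described in the text above.
EVENT_COLOURS = {
    EventKind.ARMED: "green",
    EventKind.DISARMED: "red",
    EventKind.SENSOR_RESET: "blue",
    EventKind.TRIGGER: "white",
}


def colour_for(kind):
    """Return the display colour for an event kind."""
    return EVENT_COLOURS[kind]
```

Using an enum as the key means a new event kind without a colour fails loudly with a `KeyError` instead of silently rendering in a default colour.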

Short

Our log entries are as short as possible. We cannot always fit a whole entry on one line and sometimes have to wrap onto a second, but we try to keep each entry both meaningful and as short as possible.

Time of day is parsed as a stand-out feature

We use time as the main index within the day view. Event times are parsed out of each entry and displayed clearly on their own.
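To illustrate separating the time from the rest of an entry, the sketch below assumes a raw log line that starts with an ISO 8601 timestamp followed by a space. That raw format and the `split_time` function are assumptions for the example; the source does not describe the format IPIO receives.

```python
from datetime import datetime


def split_time(raw_line):
    """Split an assumed '<ISO timestamp> <message>' line into (HH:MM, message)."""
    stamp, _, message = raw_line.partition(" ")
    when = datetime.fromisoformat(stamp)
    # The date is dropped because the day view already supplies it.
    return when.strftime("%H:%M"), message


time_label, message = split_time("2024-03-02T08:15:00 Kitchen Camera, On, by Joe")
```

Here `time_label` is "08:15" and `message` is "Kitchen Camera, On, by Joe", so the time can be drawn as its own column while the message keeps the remaining width.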

These careful design considerations make IPIO logs quick and easy to read. That improves the experience for the user and helps turn the data into information that people can act on.

IPIO Event Logs